We are fresh off the plane from Silicon Valley, having spent a week meeting with around 30 companies spanning software, hardware, B2C platforms, fintech, healthcare and energy infrastructure. Commensurate with the diffusion of AI across the economy, we are finding opportunities broadening out from within the technology sector. As usual, half of the companies we met with we hold, while the other half we are considering for our watchlist. Our trip has brought about six key insights:

- AI scaling laws – the emergence of post-training and inference scaling laws is driving additional compute requirements.

- Software 1.0 vs. Software 2.0 − traditional software is being completely rearchitected.

- Vertical large language model agents − agents are here, poised to disrupt software-as-a-service business models.

- The importance of data portability − value residing within the data layer of the new technology stack.

- B2C platforms outpace B2B software – intensifying competition and eroding switching cost advantages.

- New technology breakthroughs fuel growth opportunities – synthetic data unlocking advances in robotics.

The major insight over the last seven years in developing new AI systems is that the scale of compute matters – as we scale both compute and data, we train better models, which broadens the end-use cases of AI and enables revenue opportunities across the economy. This trend has held true since 2017 and we believe it has at least another five years to play out, a viewpoint shared by Kevin Scott (CTO of Microsoft), Sam Altman (CEO of OpenAI) and Jensen Huang (CEO of Nvidia), despite becoming the subject of investor debate over the past month.

After sitting down with companies across the ecosystem, including Nvidia, we are of the opinion that there is no imminent change in pre-training scaling laws – compute requirements are increasing c.10x every two years with each new vintage of models. This is because of the additional capability that scale is bringing to models such as ChatGPT, Llama and Claude.
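To make the compounding concrete, the short Python sketch below works through what a c.10x increase every two years would imply over different horizons. The function name and the assumption of smooth, continuous compounding are ours, purely for illustration; in practice requirements would step up with each new model vintage.

```python
def compute_multiple(years: float, factor: float = 10.0, period_years: float = 2.0) -> float:
    """Growth multiple after `years`, assuming `factor`x growth every `period_years`.

    Illustrative assumption: growth compounds smoothly between model vintages.
    """
    return factor ** (years / period_years)


for horizon in (2, 4, 5):
    print(f"{horizon} years: ~{compute_multiple(horizon):,.0f}x")
```

On these assumptions, a five-year horizon implies compute requirements a little over 300x today's level.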

There are, however, two new scaling laws emerging. The first is in post-training. This essentially makes the model a specialist by training it on domain-specific data, historically achieved through reinforcement learning from human feedback. More recently, however, reinforcement learning from AI feedback has become a second avenue – the surprise for us was hearing that the quality of this training is already on par with that of human feedback. We believe that compute requirements for post-training will soon outstrip those for pre-training.

The second is inference-time reasoning, and this has only just begun, unlocked by OpenAI’s newest model, o1. The longer the context window and the longer the model thinks, the more compute is required. We are only in the very early stages of inference deployment across the economy, but we think that inference will ultimately dwarf training as an end market for AI compute.

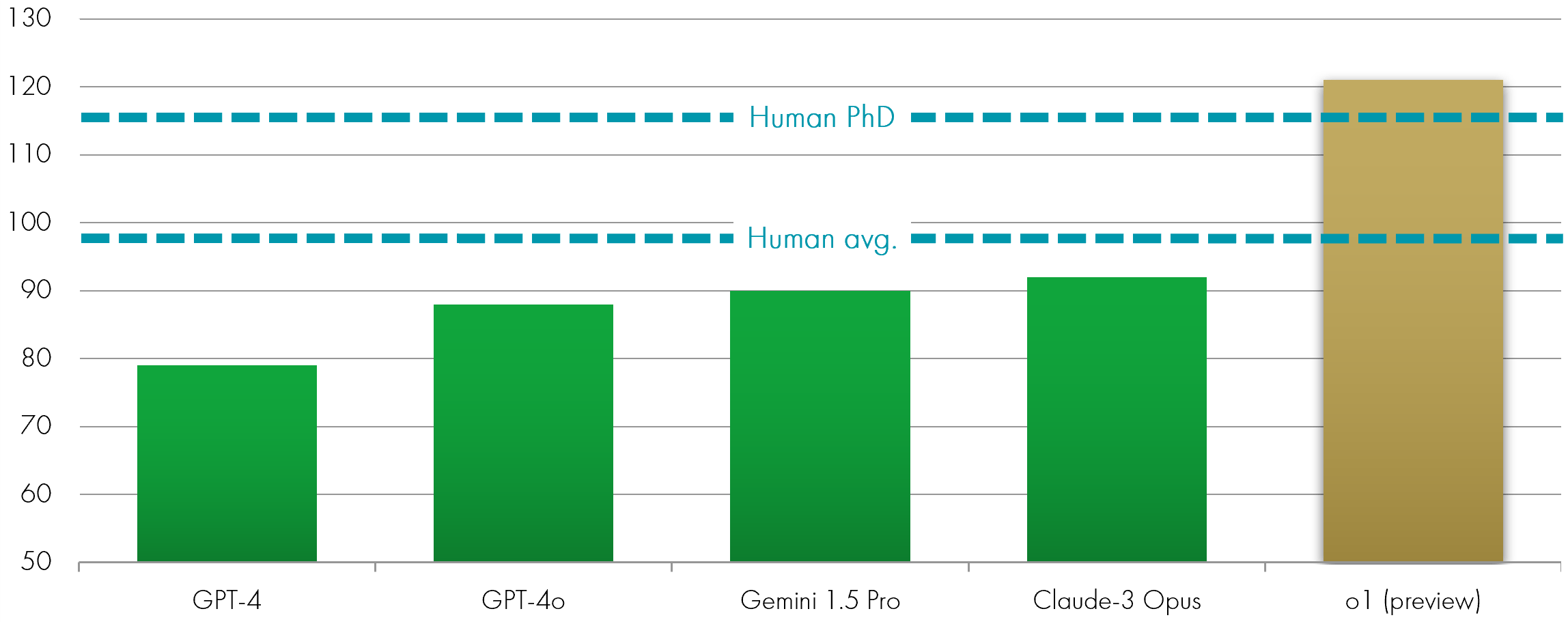

On 12th September 2024 we saw – to little fanfare – the second most defining moment of today’s AI revolution (second only to the launch of ChatGPT – AI’s own lightbulb moment in November 2022). OpenAI released the preview version of a new set of models, o1, capable of both system 1 and system 2 thinking. In simple terms, these models can now reason, not simply predict – this is ‘system 2 thinking’. We have now reached the stage where AI surpasses PhD-level humans in terms of IQ, and this has given birth to a whole new generation of software.

Model intelligence – IQ test

Software 1.0 vs. Software 2.0 – traditional software is being completely rearchitected

We have written at length this year about the threat AI-infused software poses to enterprise software company business models. This threat is now becoming an actuality − traditional software is being completely rearchitected and the transition from software 1.0 to software 2.0 is underway. The ramifications for both software and hardware companies, we believe, are significant.

Software 1.0 is what we have all grown up with and live in every day – thousands of lines of code essentially telling computers how to act in a given situation. This is deterministic software and it is run on CPUs (Intel or AMD), with the most entrenched operating system being Microsoft Windows. Software 2.0 is neural networks – an AI model is embedded within the software, and it decides on the best course of action by itself. The underlying operating system for that is Nvidia and the underlying unit of compute is the GPU, not the CPU.

It is early days, but we think that in ten years’ time this is how software will be run: on accelerated compute infrastructure. The key unlock has been AI’s ability to automate 50% of coding, which we see going to 90% before this time next year.

What does this mean for traditional software? Incumbents have got to pivot, and they have to pivot quickly. We do not think the moats that software-as-a-service business models have enjoyed over the past decade hold anymore − think of Salesforce, Intuit, Adobe, Workday, even Microsoft. These are the poster children of the deterministic era.

The companies that have an advantage in building software 2.0 (machine learning) from a first-principles perspective are those that have been building on cognitive architectures from the outset, rather than retrofitting existing software to work within a new framework.

Think of today’s software in terms of the house you are living in – it depends upon the foundations on which it was built. The agentic software coming to market requires a reconfiguration of these foundations. Incumbents at least have the deep pockets required to re-architect, because this will be necessary.

What about for hardware? We think the role of the CPU, and therefore the relevance of dominant providers of CPUs like Intel, will continue to diminish. As we switch to software 2.0, we need to see the world’s $1 trillion installed base of data center infrastructure modernised to accommodate accelerated compute. This is already happening.

Vertical large language model agents − agents are here, poised to disrupt Software-as-a-Service business models.

It was not long ago that Satya Nadella, CEO of Microsoft, heralded ‘The Age of Copilots’. AI-powered Copilots were expected to transform the way we work, assisting us in tasks from software development to writing speeches. In some instances, we have seen dramatic productivity gains as a result, but these have for the most part been confined to certain killer use cases – software development and customer service, two areas where Copilots have delivered productivity gains of c.50%, have proved the exception rather than the rule.

The Age of Copilots, best thought of as assistive AI software, has turned out to be short-lived. This is because something better has come along. AI is now capable of acting on our behalf; given a task, AI can now go away and complete it, with minimal human intervention. This new breed of software – AI agents – has seen an explosion since September. We have lost count of the number of software companies that have brought AI agents to market since, and we have already reached the point where domain-specific agents are hitting 100% accuracy.

Agential software is part of Software 2.0, built on a new cognitive architecture centred around an LLM (large language model) that decides the control flow of an application in real time. The LLM is essentially reasoning its way through a software task, much the same as we would reason over what to have for breakfast. It bears no resemblance to the software we all know and use.
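The idea of a model deciding the control flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor’s implementation: `stub_model` is a hypothetical stand-in for a real LLM call, and the two tools and their outputs are invented for the example.

```python
from typing import Callable, Dict, List


# Hypothetical tools; a real agent would wrap APIs, databases or other systems.
def look_up_order(task: str) -> str:
    return "order 123: delivered"


def draft_reply(task: str) -> str:
    return "reply drafted"


TOOLS: Dict[str, Callable[[str], str]] = {
    "look_up_order": look_up_order,
    "draft_reply": draft_reply,
}


def stub_model(task: str, history: List[str]) -> str:
    """Stand-in for an LLM call: returns the name of the next tool, or 'done'."""
    if not history:
        return "look_up_order"
    if len(history) == 1:
        return "draft_reply"
    return "done"


def run_agent(task: str, max_steps: int = 5) -> List[str]:
    """The model, not hard-coded branching, chooses each step until it says 'done'."""
    history: List[str] = []
    for _ in range(max_steps):
        action = stub_model(task, history)
        if action == "done":
            break
        history.append(f"{action} -> {TOOLS[action](task)}")
    return history


print(run_agent("Where is my order?"))
```

The contrast with Software 1.0 is that the sequence of steps is not written into `run_agent`; swapping the stub for a real model would let the sequence change from task to task.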

We see today’s dominant enterprise software companies as jacks of all trades and masters of few – they cover too many bases to deliver the highest quality user experience, compounded by the fact that they are purchased by C-suite executives, not the end user. Specialised B2B companies catering to a certain vertical within enterprise software are proving capable of delivering a 10x better experience, with vertical LLM agents emerging as the unlock to make it into enterprise budgets. This is based on the early productivity gains we are witnessing, which are staggering.

In customer service, AI agents are now achieving deflection rates of 50-90% (meaning that calls are only passed through to a human 10% of the time when using the most effective AI agents). Agency, a new company backed by Sequoia and HubSpot Ventures, is deploying agential software to streamline B2B workflows such as CRM and ERP, boosting productivity by 10x for its B2B customers, while Anthropic has released an agent that can now even control your computer via the mouse and keyboard. If we take a step back and think about what this could mean for global productivity, Nvidia estimates the total addressable market for AI productivity gains to equal $100 trillion, the size of the global economy, with a 10% efficiency uplift. Agents are a key part of this.
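As a quick illustration of what the deflection range above means in practice, the Python sketch below converts a deflection rate into the number of contacts still reaching a human. The volume of 10,000 contacts and the function name are hypothetical, chosen only to make the arithmetic visible.

```python
def human_handled(total_contacts: int, deflection_rate: float) -> int:
    """Contacts still passed to a human after the AI agent deflects its share."""
    if not 0.0 <= deflection_rate <= 1.0:
        raise ValueError("deflection_rate must be between 0 and 1")
    return round(total_contacts * (1.0 - deflection_rate))


contacts = 10_000  # hypothetical monthly volume
for rate in (0.5, 0.9):
    print(f"{rate:.0%} deflection: {human_handled(contacts, rate):,} contacts reach a human")
```

Moving from 50% to 90% deflection cuts the human workload from half of all contacts to a tenth, a fivefold reduction rather than the less-than-twofold change the headline percentages might suggest.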

Can the Salesforces of this world compete with their own AI agents? They are certainly trying, and time will tell, but we see a painful recalibration of pricing models ahead. The pricing structure of this new AI software is based on consumption (work done) as opposed to charging on a per-seat basis. As such, this new AI software not only offers a leap forward in productivity but is also vastly more cost-effective. The sticking point, however, is that software giants like Salesforce and Adobe rely on seat-based business models and will have to disrupt their own high-margin business lines to compete effectively.
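The difference between the two pricing structures can be sketched with some simple arithmetic. Every number below (seat count, price per seat, price per task, task volume) is hypothetical and chosen only for illustration; none comes from the companies discussed.

```python
def seat_based_cost(seats: int, price_per_seat: float) -> float:
    """Monthly cost when the vendor charges per user licence."""
    return seats * price_per_seat


def consumption_cost(tasks_completed: int, price_per_task: float) -> float:
    """Monthly cost when the vendor charges for work done."""
    return tasks_completed * price_per_task


def break_even_tasks(seats: int, price_per_seat: float, price_per_task: float) -> float:
    """Task volume at which the two pricing models cost the same."""
    return seat_based_cost(seats, price_per_seat) / price_per_task


# Hypothetical inputs: 100 seats at $150 each vs. 5,000 tasks at $1.50 each.
print(seat_based_cost(100, 150.0))         # 15000.0
print(consumption_cost(5_000, 1.5))        # 7500.0
print(break_even_tasks(100, 150.0, 1.5))   # 10000.0
```

Under these assumed inputs the customer pays half as much on consumption pricing, which is the revenue an incumbent would forgo on the same account by switching models.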

The importance of data portability − value residing within the data layer of the new technology stack.

As every company adopts an AI strategy, you will have all heard them talk about their respective data advantages. This is particularly true for system of record companies – companies that provide software that serves as an authoritative source for critical data elements within an enterprise. This includes CRM systems like Salesforce, ERP systems like SAP or NetSuite, and HR management systems like Workday.

We believe that the ‘data advantage’ of system of record enterprise software companies is more fragile than most think. It is the customers that own the data, not these system of record enterprise software companies, and this data is housed in Snowflake/Databricks and/or a hyperscaler’s data lake. This was confirmed to us through our questioning of companies last week and underscores to us the importance of Snowflake and Databricks’ place in the new technology stack.

It is not ownership of this data that matters here, but portability of this data and ease of integration. We met with some companies whose ‘time to value’ for customers in delivering data analytics and insights was as long as six to nine months, due to the heavy lift of integrating more than 500 data points. On the other hand, platforms built as databases first – with marketing and data analytics solutions layered on top, as opposed to the other way around – are achieving time to value for customers in under 48 hours, and this is because of their ability to seamlessly integrate data. In a world where speed of data ingestion is increasingly critical to enable AI applications, we believe that seamless data integrations will be a growing competitive advantage of certain enterprise software platforms.

An exception to our view of data moats diminishing lies in proprietary and real-time data. Companies sitting on top of large bodies of real-time data are in a leading position – these range from B2C platforms like Airbnb and Netflix, to fintech companies like Affirm, Visa and Moody’s, to satellite company Planet Labs. The unifying theme of this cohort is a critical mass of proprietary, unique and real-time data.

B2C platforms outpace B2B software − intensifying competition and eroding switching cost advantages

Each platform shift presents significant opportunities for new challengers while posing substantial risks for incumbents. The first major transition − from on-premise software to cloud-based ‘Software as a Service’ (SaaS) − reshaped the software industry and facilitated the rise of cloud-native enterprise software giants such as Salesforce, Workday, and SAP. Over the past two decades, these companies have established dominance by requiring customers to make significant upfront investments to integrate their software into enterprises. Once embedded, they expanded their offerings to deliver additional value. These solutions are now regarded as systems of record, fulfilling critical yet standardised roles within organisations.

Today, the transition to consumption-based software, often referred to as ‘Service as a Software’, marks the beginning of a new platform shift – from Software 1.0 to Software 2.0. Companies built on Software 2.0 are disrupting markets by offering products that deliver substantial value at a fraction of traditional costs. For example, Sierra’s AI-powered customer service agent provides support at one-twelfth of the cost of conventional call centres. This is just one of many innovations targeting incumbents, which are increasingly criticised for their high per-seat pricing models.

Recognising this disruptive threat, established software players are pivoting to expand beyond niche functions and into broader organisational solutions. For instance, insights from Silicon Valley reveal that ServiceNow is shifting from back-office operations to the front office, while Salesforce is moving in the opposite direction. These companies are racing to position themselves as the ‘AI agent of choice’ for enterprises. This shift is transforming the enterprise software industry from a long-term investment stronghold into a highly competitive market. It is a race to be at the top of the funnel.

In contrast, leading B2C platform innovators enjoy economies of scale and dominant market positions, which they share with their customers. By passing on these efficiencies, they significantly reduce the threat of disruption, making it much harder for new entrants to compete on price without compromising quality. Examples of such platforms include Uber, Airbnb, Spotify, Shopify, and Netflix. B2C platform companies poised for a golden era over the next decade are those that, driven by founder-led strategies, successfully pivoted to profitability during 2022–23. These firms have swiftly adopted AI to enhance operational efficiency and are now focused on creating new revenue streams.

By leveraging AI to enhance user engagement and experience, these companies are driving greater platform consumption, attracting more users, and generating additional data to refine their AI models. Unlike seat-based enterprise software, B2C platforms benefit from powerful network effects as they layer on AI solutions, further solidifying their competitive advantage.

New technological breakthroughs fuel growth opportunities − synthetic data unlocking advances in AI research

Breakthroughs in synthetic data generation are fuelling the next generation of AI systems – ranging from structured data generated by LLMs, such as OpenAI’s o1 reasoning model, to video generated by OpenAI’s Sora model. These innovations enable companies like OpenAI, Nvidia and Anthropic to break through the data wall, paving the way for the emergence of new types of multimodal AI models. As a reminder, the scale of compute matters for AI systems – as we scale both compute and data, we achieve better models.

While we have already discussed the unhobbling effect of OpenAI’s reasoning model, OpenAI’s Sora model and subsequent video-generation models from fast followers are having a profound impact on content creation, a historically costly aspect of the creative industry – from film production to social media advertising. In the last two years, diffusion models have democratised still-image generation and editing, opening opportunities to a wider audience. However, video content had largely remained unaddressed until the recent advancements introduced by OpenAI’s Sora. This will have a significant impact not only on the creative industries but also on many others, such as robotics.

This architectural breakthrough redefines content creation by transforming text prompts into hyper-realistic videos. Using diffusion models and transformer architectures, Sora produces detailed, minute-long videos that capture intricate elements such as lighting, texture, and motion – initial applications, including marketing, storytelling, and prototyping, demonstrate its vast potential.

Concurrently, advances in robotic AI systems that integrate imitation-based AI frameworks with LLMs have unlocked a new scaling law for robotics. This breakthrough requires a significant volume of unstructured data to train robots, ranging from automobiles to humanoids. Nvidia provides the solution – by leveraging video-generation AI systems initially introduced by OpenAI, it enables robotics companies to train systems in the Omniverse. By using only a fraction of real-world unstructured data, Nvidia’s approach generates 10 times the video content within the Omniverse, facilitating the training of robotics across applications, from humanoids and autonomous vehicles to process automation.

It is difficult to overstate the importance of this breakthrough and its role in accelerating robotic development and deployment across the economy. One notable example is Nvidia’s Project GR00T, which enables robots to process diverse inputs – text, speech, video, and demonstrations – facilitating intuitive human-robot collaboration. Recent examples from Figure AI and Tesla, such as Figure 01 and Tesla’s Optimus, serve as harbingers of the capabilities that are set to evolve rapidly. We anticipate significant further breakthroughs over the next 12 months as scaling laws start to drive progress in robotics.


KEY RISKS

Past performance is not a guide to future performance. The value of an investment and the income generated from it can fall as well as rise and is not guaranteed. You may get back less than you originally invested.

The issue of units/shares in Liontrust Funds may be subject to an initial charge, which will have an impact on the realisable value of the investment, particularly in the short term. Investments should always be considered as long term.

The Funds managed by the Global Innovation Team:

- May hold overseas investments that may carry a higher currency risk. They are valued by reference to their local currency which may move up or down when compared to the currency of a Fund.

- May have a concentrated portfolio, i.e. hold a limited number of investments. If one of these investments falls in value this can have a greater impact on a Fund's value than if it held a larger number of investments.

- May encounter liquidity constraints from time to time. The spread between the price you buy and sell shares will reflect the less liquid nature of the underlying holdings.

- Outside of normal conditions, may hold higher levels of cash which may be deposited with several credit counterparties (e.g. international banks). A credit risk arises should one or more of these counterparties be unable to return the deposited cash.

- May be exposed to Counterparty Risk: any derivative contract, including FX hedging, may be at risk if the counterparty fails.

- Do not guarantee a level of income.

The risks detailed above are reflective of the full range of Funds managed by the Global Innovation Team and not all of the risks listed are applicable to each individual Fund. For the risks associated with an individual Fund, please refer to its Key Investor Information Document (KIID)/PRIIP KID.

DISCLAIMER

This is a marketing communication. Before making an investment, you should read the relevant Prospectus and the Key Investor Information Document (KIID), which provide full product details including investment charges and risks. These documents can be obtained, free of charge, from www.liontrust.co.uk or direct from Liontrust. Always research your own investments. If you are not a professional investor please consult a regulated financial adviser regarding the suitability of such an investment for you and your personal circumstances.

This should not be construed as advice for investment in any product or security mentioned, an offer to buy or sell units/shares of Funds mentioned, or a solicitation to purchase securities in any company or investment product. Examples of stocks are provided for general information only to demonstrate our investment philosophy. The investment being promoted is for units in a fund, not directly in the underlying assets. It contains information and analysis that is believed to be accurate at the time of publication, but is subject to change without notice. Whilst care has been taken in compiling the content of this document, no representation or warranty, express or implied, is made by Liontrust as to its accuracy or completeness, including for external sources (which may have been used) which have not been verified. It should not be copied, forwarded, reproduced, divulged or otherwise distributed in any form whether by way of fax, email, oral or otherwise, in whole or in part without the express and prior written consent of Liontrust.